Starting a New VR Project: OPSYN (This Project), Not OOPSYN (the First Project)

I am currently working on my next VR/XR project, OPSYN (Out of Place Synthesizer), in the Berklee fellowship program. OPSYN is a sci-fi, cyberpunk, immersive virtual musical environment: a series of virtual sound-making objects and musical instruments that a viewer interacts with to create their own sounds. I will record the progress of the project in this blog weekly. For my master's project, I developed OOPSYN (Out of Place Synthesizer), an immersive virtual musical environment. That work has been presented in a couple of places, including Espacio Fundación Telefónica.

Next, students from @berkleevalencia present their projects. Among them is Tomokazu hioki, who presents Oppsyn: a synthesizer set inside a science fiction environment #XRmúsica https://t.co/lXT87Yh0hf pic.twitter.com/LKujxRQyNY

— Espacio Fundación Telefónica (@EspacioFTef) May 24, 2018

The next project is called OPSYN (still Out of Place Synthesizer); I just dropped one O. I noticed that native English speakers pronounce OOPSYN like "oops", with a [u] sound rather than the [o] sound I intended.

Why am I pursuing a VR music environment? The answer is simple: it's exciting and fun! I can make what I want to see and hear. You can do anything in the virtual world, even magic (fire from your hands, like in a movie). Entertainment is evolving every day, and people won't be satisfied with just passively watching or listening to music, since people are players by nature. They go to a concert or festival and find value in watching and listening there, since it's not the same as listening to music in the living room. But still, a live event is passive in terms of the music itself. The audience can dance or do some kind of performance, but none of that is about making the music. I'm a composer, and my most exciting moments are when I'm making music rather than listening to it. A VR music environment would give players the opportunity to enjoy participating in the music even more!

Software

This fellowship project is a continuation of my master's project, but I want to improve it in several ways: better interaction (using Leap Motion), implementing audio inside the game engine (Wwise, Unreal Audio Engine), and getting familiar with the game engine itself. I'm not quite sure yet, but I would like to build this project in Unreal Engine 4 (UE4). I have worked on some projects in Unity and I'm more familiar with that engine, but I have heard good reviews of Unreal Engine, and some people who asked me to collaborate with them are using UE4. I'm also used to visual scripting languages such as Max/MSP, so UE4's Blueprint looks familiar to me. Unity and UE4 can do basically the same things, but I feel that UE4 requires less coding/scripting for the same task and is more artist friendly (some artists build games using only Blueprint, the visual scripting editor in UE4). You can do a lot with scripting in C#. C# is a great language and easier than C++, but if you want to make your own audio plug-in, you will eventually need to deal with a lower-level language (C++). I would like to develop my own plug-in in the future, so it would be good to learn C++ while learning UE4. As a composer/sound designer for games and interactive media, you need to get familiar with both Unity and UE4 anyway, so this is a good time to start a project in a different engine.

As for the audio engine, I originally wanted to do everything within the game engine, for example with libpd (which lets you embed Pure Data in the game engine) or Csound. However, these integrations have not had many updates recently and are still experimental. The goal of this project is to explore the maximum possibilities of the VR music experience rather than to figure out how to integrate a sound engine. So instead of libpd, I'll go for a combination of Unreal Audio Engine, Max/MSP, and Wwise (audio middleware). There is a rumor that Cycling '74 (the developer of Max/MSP) is working on integrating Max/MSP into a game engine. Some companies are also developing audio engines for games, such as Tsugi's GameSynth and Unreal's new audio engine. Until there are more developments in audio engines (or I know enough C++ to contribute to a sound engine), I will try to maximize my knowledge of DSP (digital signal processing) and develop my artistic vision (music composition and sound design) using the tools currently available.

OSC (Open Sound Control)

Both Unity and UE4 can do basically the same things, but somehow there is more information about OSC for Unity. OSC is a protocol for networking sound synthesizers, computers, and other multimedia devices for purposes such as musical performance or show control. In practice, it lets you connect different applications over a network: you could connect UE4 with Max/MSP or TouchOSC (an iOS application). I used OSC in my first VR project, OOPSYN (Out of Place Synthesizer), to connect Ableton Live and Unity. I was able to get OSC working in UE4 as well.
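To make the protocol a little more concrete, here is a minimal sketch of what an OSC message looks like on the wire, written in Python with only the standard library. It is not the UE4 plugin or the Max/MSP objects I actually used; it just follows the OSC 1.0 layout (a padded address string, a padded type tag string, then big-endian arguments). The address `/hello`, the host, and the port in the usage note are placeholder assumptions.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 strings require."""
    padded_len = (len(data) // 4 + 1) * 4
    return data.ljust(padded_len, b"\x00")


def encode_osc(address: str, *args) -> bytes:
    """Encode one OSC message with int32, float32, and string arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            # bool is a subclass of int in Python; this sketch does not handle it
            raise TypeError("bool arguments are not supported in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # 32-bit big-endian integer
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # 32-bit big-endian float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += osc_pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload


def send_osc(host: str, port: int, address: str, *args) -> None:
    """Send one OSC message as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_osc(address, *args), (host, port))
```

For example, `send_osc("127.0.0.1", 8000, "/hello", "Hello")` would send the string "Hello" to whatever is listening for OSC over UDP on port 8000 of the same machine (the port is an assumption; use the one your receiver is set to).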

There is an OSC plugin for UE4 that you can download from here.

I followed the video tutorial in the link.

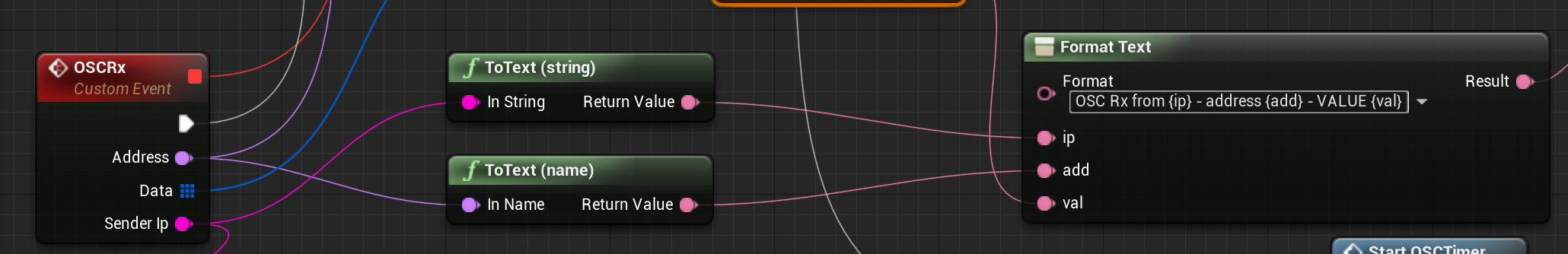

In the newer version of the OSC plugin, a few things are different in the patching. UE4 no longer supports all automatic type conversions, so you need to add them manually (Fig. 1).

Fig.1 New UE4 manual conversions

In the video, he drags the line straight from address to ip directory, but you now need to put a ToText node between them.

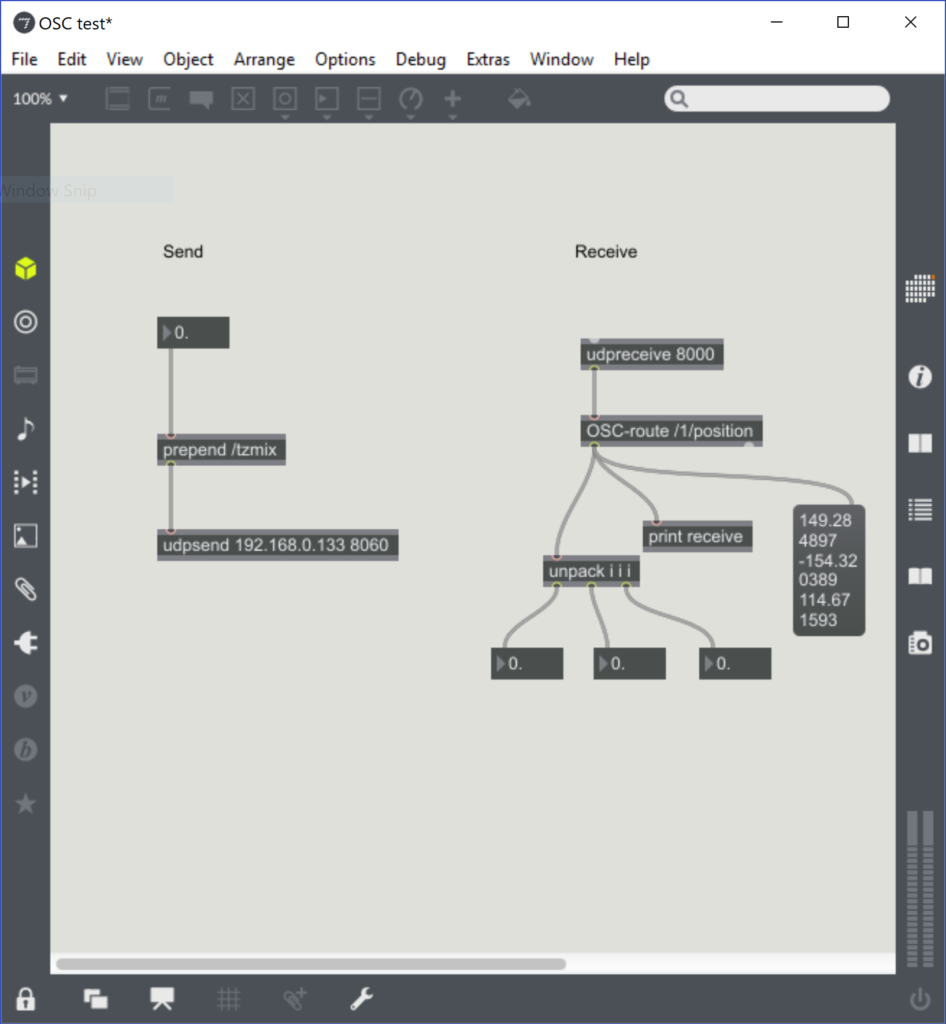

I was able to send OSC messages between the TouchOSC app, Max/MSP (Fig. 2), and UE4.

Fig.2 Max OSC patch example

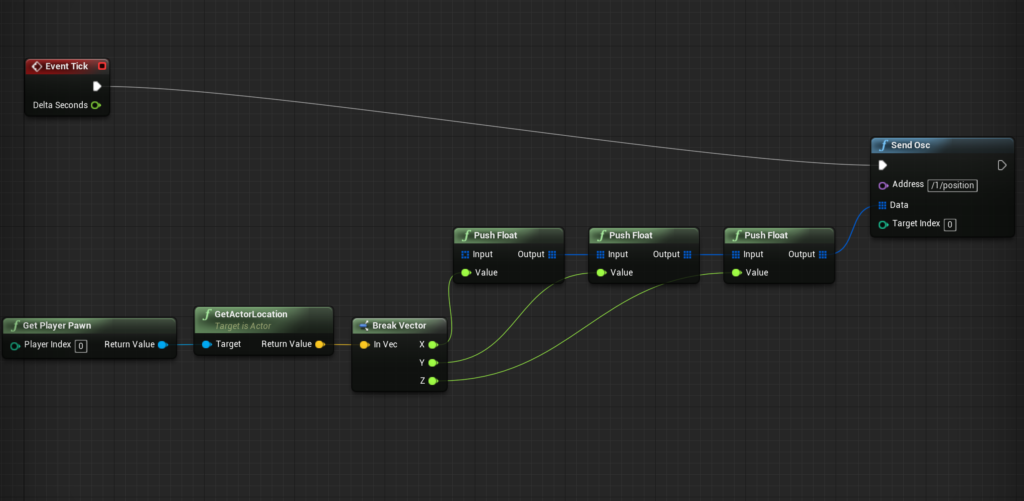

Using Send OSC, I was able to send the position of the default pawn from UE4 to Max/MSP (Fig. 3).
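For anyone curious what the receiving side has to do with a message like this, here is a rough Python sketch that decodes an OSC message carrying three floats. In my setup Max/MSP does this for me; the code only illustrates the OSC 1.0 layout. The address `/pawn/position` and the x, y, z ordering are assumptions for illustration, not necessarily what the UE4 plugin sends.

```python
import struct


def read_osc_string(data: bytes, offset: int):
    """Read a null-terminated OSC string; return it and the next 4-byte-aligned offset."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminating null and any padding up to the next multiple of 4
    next_offset = (end // 4 + 1) * 4
    return text, next_offset


def decode_osc(data: bytes):
    """Decode one OSC message with int32 ('i'), float32 ('f'), and string ('s') arguments."""
    address, offset = read_osc_string(data, 0)
    type_tags, offset = read_osc_string(data, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in type_tags[1:]:
        if tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "s":
            text, offset = read_osc_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported OSC type tag: {tag}")
    return address, args


# Hypothetical packet: address, type tags ",fff", then three big-endian floats
packet = (
    b"/pawn/position\x00\x00"
    + b",fff\x00\x00\x00\x00"
    + struct.pack(">fff", 1.5, -2.0, 100.25)
)
address, position = decode_osc(packet)
print(address, position)
```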

For some reason, UE4 won't send the OSC message out to another computer or to TouchOSC (I was able to send the string "Hello"). I'm still trying to figure it out, although it won't be a big problem since I will use just one computer for this project.

By next time, I will create a Git repository for this project.