OPSYN - Out of Place Synthesizer -

Project Information

Abstract

Sci-Fi, Cyberpunk

Virtual Reality Musical Environment

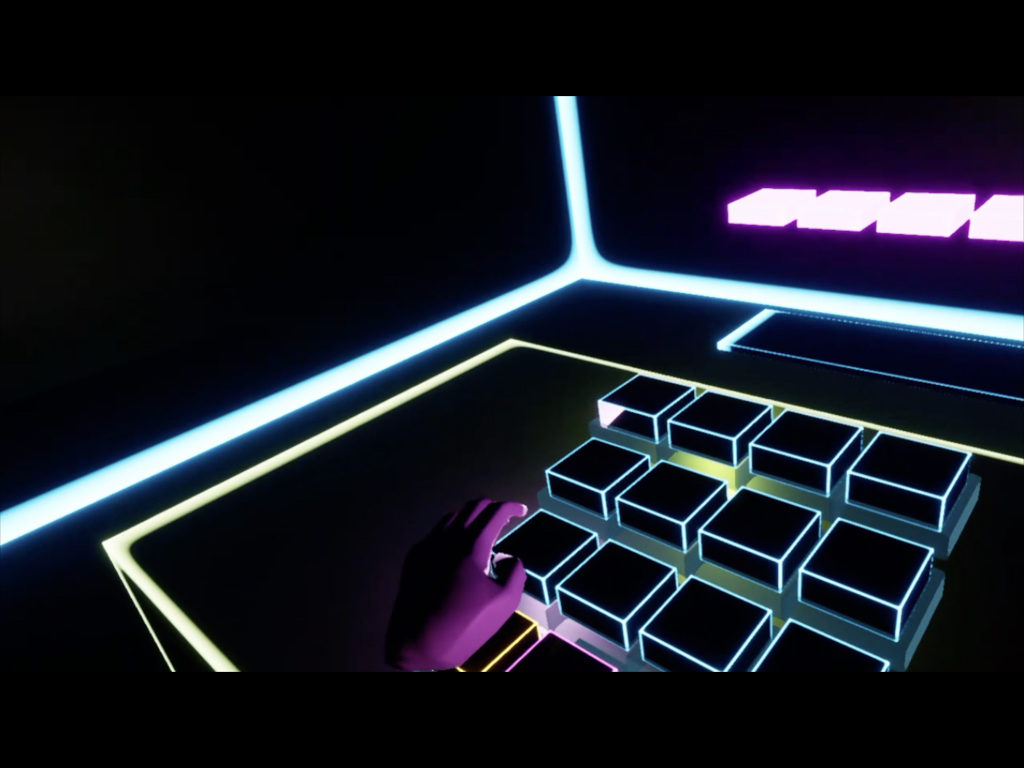

OPSYN is a Sci-Fi, Cyberpunk virtual reality musical environment with its own virtual reality musical instruments (VRMIs). It is a series of virtual sound-making objects and musical interfaces/instruments that a viewer interacts with to create their own sounds. Aesthetically, the virtual environment uses a Cyberpunk look. The goal of this project is to build a music studio and performance tools within a Virtual Reality (VR) and/or Augmented Reality (AR) universe where users can experience the process of making their own sound and music. VR/AR has a lot of potential as a medium, and further musical content will be created in the future.

Introduction

The Origin of OPSYN

Why I do what I do

First, I am going to talk about how I got into making a VR music environment. OPSYN originally started as my master’s project at Berklee College of Music in Valencia, Spain. It was originally named OOPSYN (two “O”s, but the same name: Out of Place Synthesizer), and it featured a Steampunk look instead of the Cyberpunk one. (The details of why I changed the name are here.) Below is the video of OOPSYN.

Fortunately, after the master’s degree, I was able to continue my project under the post-master’s fellowship program at the same college, this time in Boston. In the fellowship, I continued to research my project while working at the college and taking some undergraduate classes. This was a great opportunity, since I didn’t take any game audio classes during my undergrad and I was able to go back and take them. Furthermore, the college now offers some VR-related classes, which didn’t even exist when I was a student there. During the master’s degree, I had to teach myself how to use a game engine, so it was really great to have a professor, Jean-Luc Cohen, who is knowledgeable about game audio and immersive audio. I learned a lot from him about VR and procedural sound design.

Review of the state of the Art

Background Story

What inspired the OPSYN project

My academic supervisor in Valencia was Pierce Warnecke, a great new media artist who taught me a lot of techniques in Max and Jitter. One of the algorithms he implemented is still used in the new OPSYN project. I was also lucky to study under many superb and unique professors there: DJing and modular synthesis with Nacho Marco, music production and recording with Daniel Castelar, musical direction and practice with Pablo Munguía, Ableton Live and Electronic Dance Music production with Ben Cantil, and VJing and video projection with Zebbler Peter Berdovsky. All the courses I took were essential for creating OOPSYN.

Regarding the art form, these are my personal inspirations for OPSYN: Wintergatan’s Marble Machine, Martin Messier + Nicolas Bernier’s LA CHAMBRE DES MACHINES, Krzysztof Cybulski’s ACOUSTIC ADDITIVE SYNTH, and Electronic Dance Music performances with Ableton Push, Novation Launchpad or DJ TechTools Midi Fighter by artists such as Mad Zach, ill Gates, R!OT and more.

Originally, I was thinking of making actual mechanical instruments for my master’s project, but time was limited (the master’s program was only a year) and I needed to build my idea faster. The great thing about creating content virtually is that ideas can be prototyped quickly and at lower cost. In addition, I could use a huge number of assets and tools in the game engine. Furthermore, since I have access to AR glasses (thanks to the Epic MegaGrants team), I will be able to expand my real music studio with the flexible virtual environment, OPSYN.

I chose Unreal Engine for development because I was impressed with its graphic quality and because an advisor at Berklee, Jeanine Cowen, recommended it. Also, as an artist, I found that Unreal Engine’s Blueprint system helped a lot in building an environment more intuitively, and the visual scripting environment is familiar to me since I’ve been a Max user for a long time. It’s easier for me to organize and visually understand what’s going on at a glance compared to traditional text-based coding.

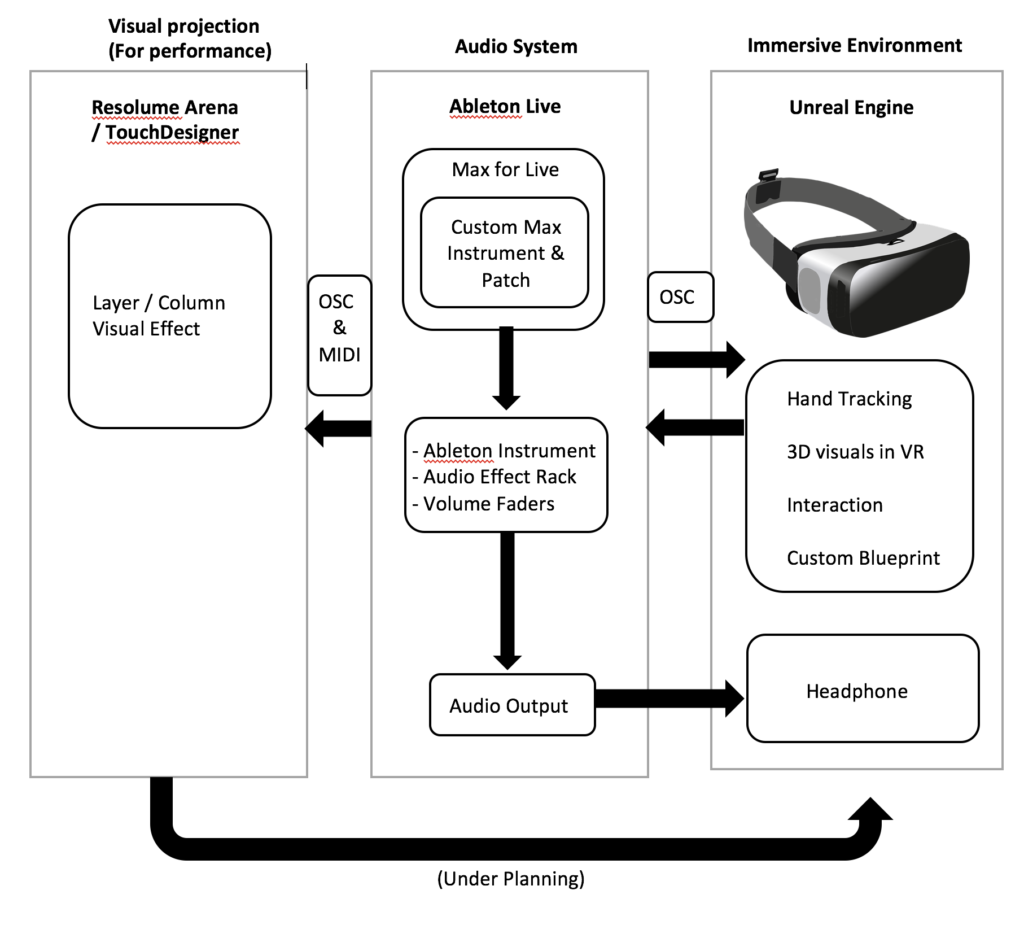

Description

OPSYN uses technologies and software such as Unreal Engine (a game engine) with custom Blueprints, and Max (also known as Max/MSP/Jitter, a visual programming language for music and multimedia) and Ableton Live (a digital audio workstation) for the sound engine. OPSYN uses Leap Motion as the hand tracking controller. I also use Resolume Arena (VJ software) for performances.

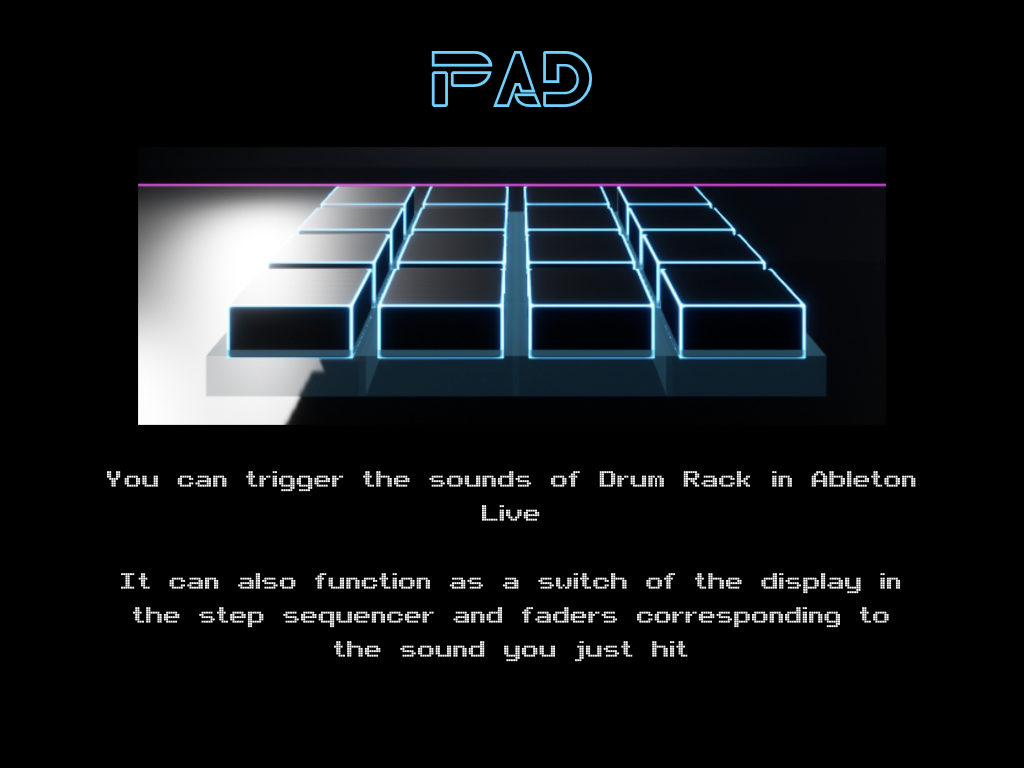

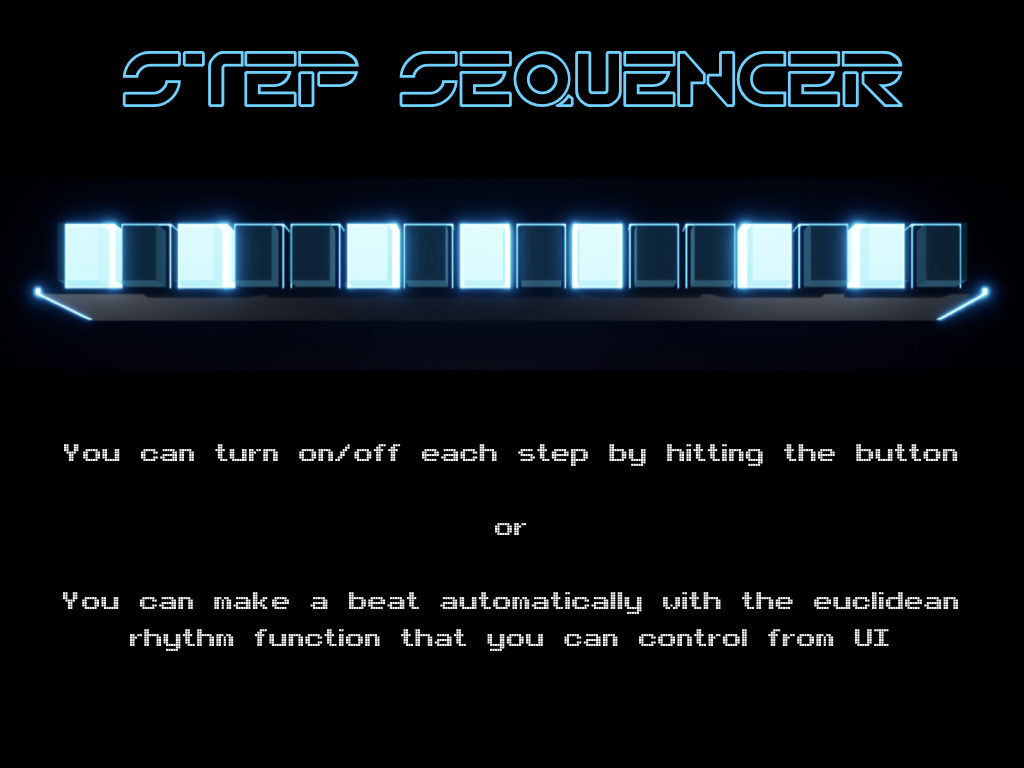

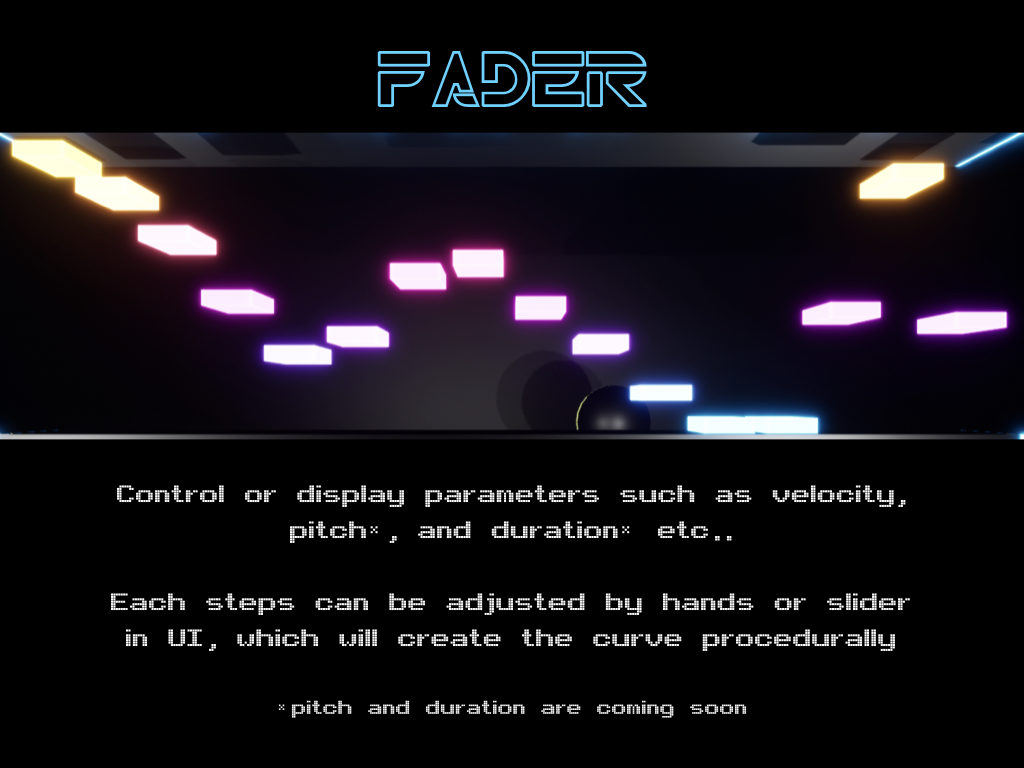

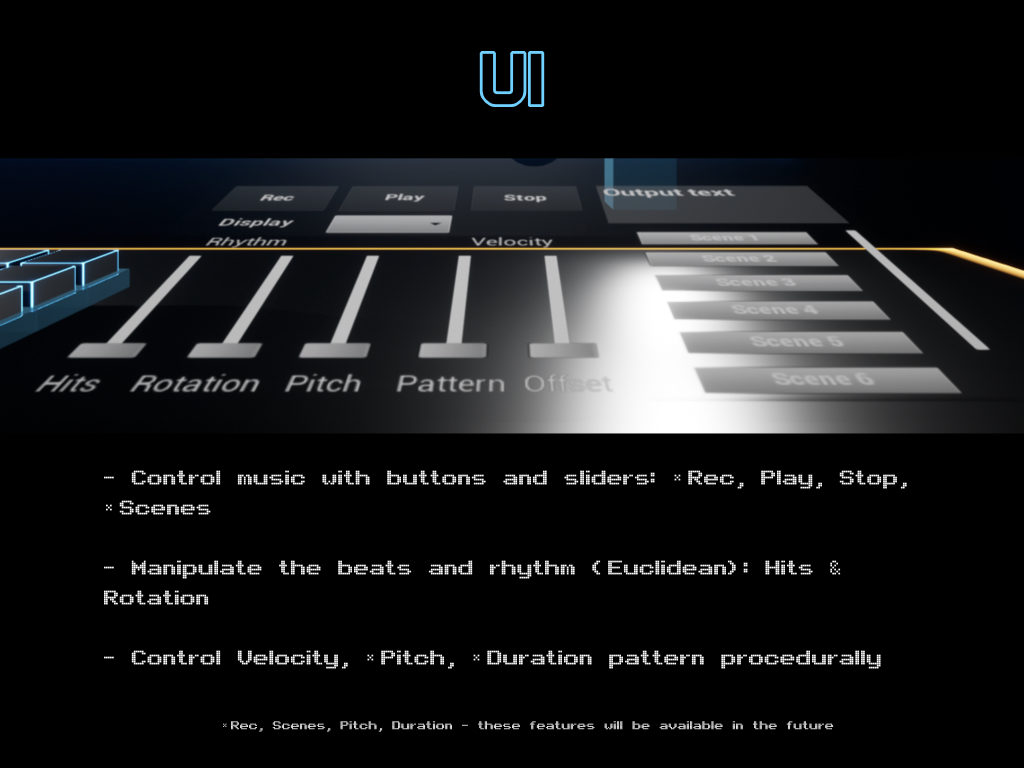

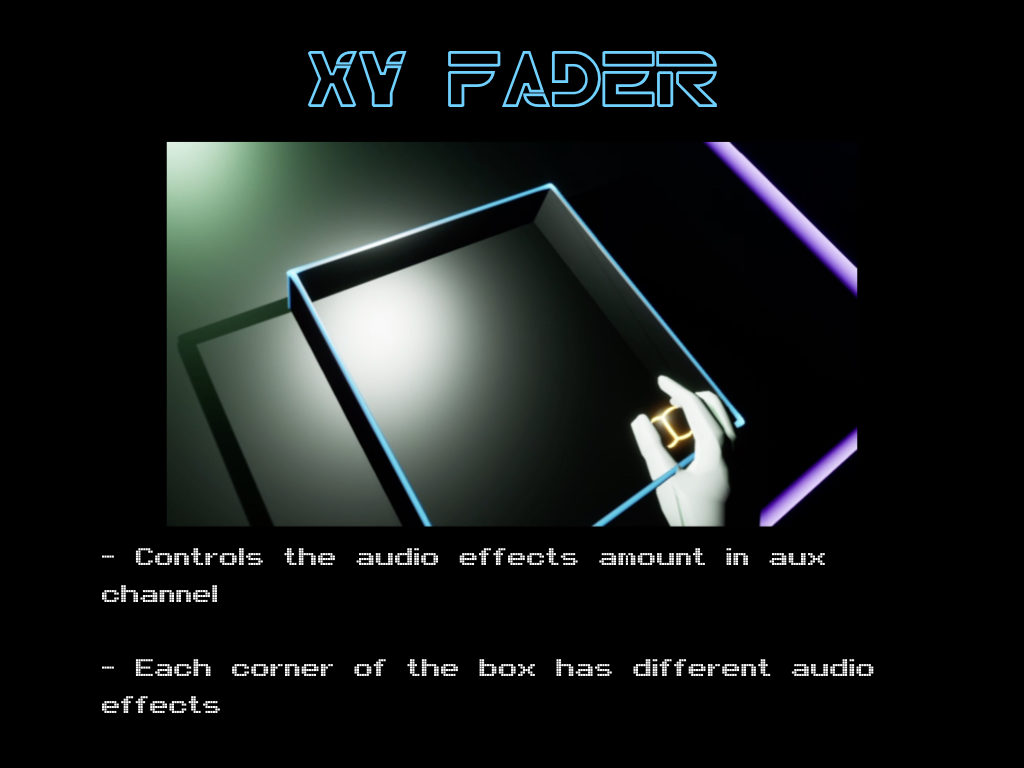

Currently, OPSYN features instruments/interfaces such as PAD, STEP SEQUENCER, FADER, UI and XY FADER. For the glowing emissive material, I used Liaret’s Unreal 4 Tutorial: Advanced iron material as a reference.

Innovative Aspects

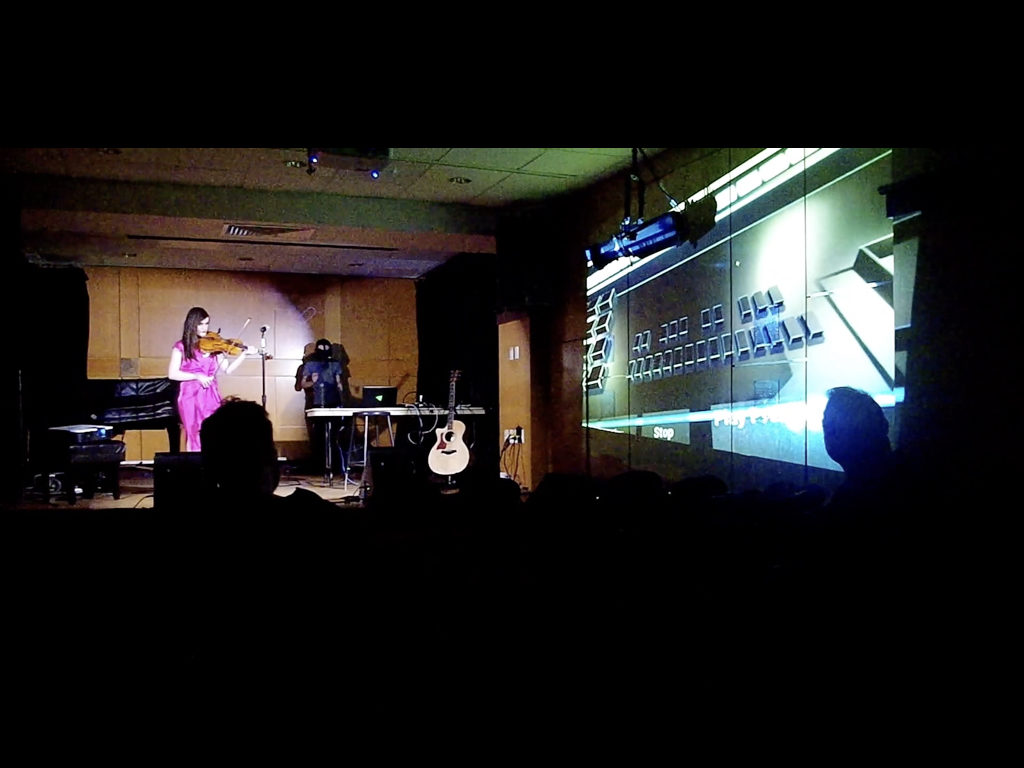

Originally, OPSYN was made for making music and I didn’t intend to use it for performance, but I had a chance to perform with another fellowship scholar, Sofija Zlatanova. She asked me to collaborate on the composition and production of her new EP, and after the EP was completed, we had an opportunity to perform the song together. We wanted to do something interesting, and I came up with the idea of using OPSYN on stage. I don’t think it’s common to use a VR headset on stage yet, and I think this was an innovative idea. For this show, I created a video projection with Resolume Arena, which is controlled from Ableton Live via OSC and MIDI in real time, and Ableton Live is in turn controlled with OPSYN, so there were three pieces of software running at the show.

Challenges, both expected and unanticipated

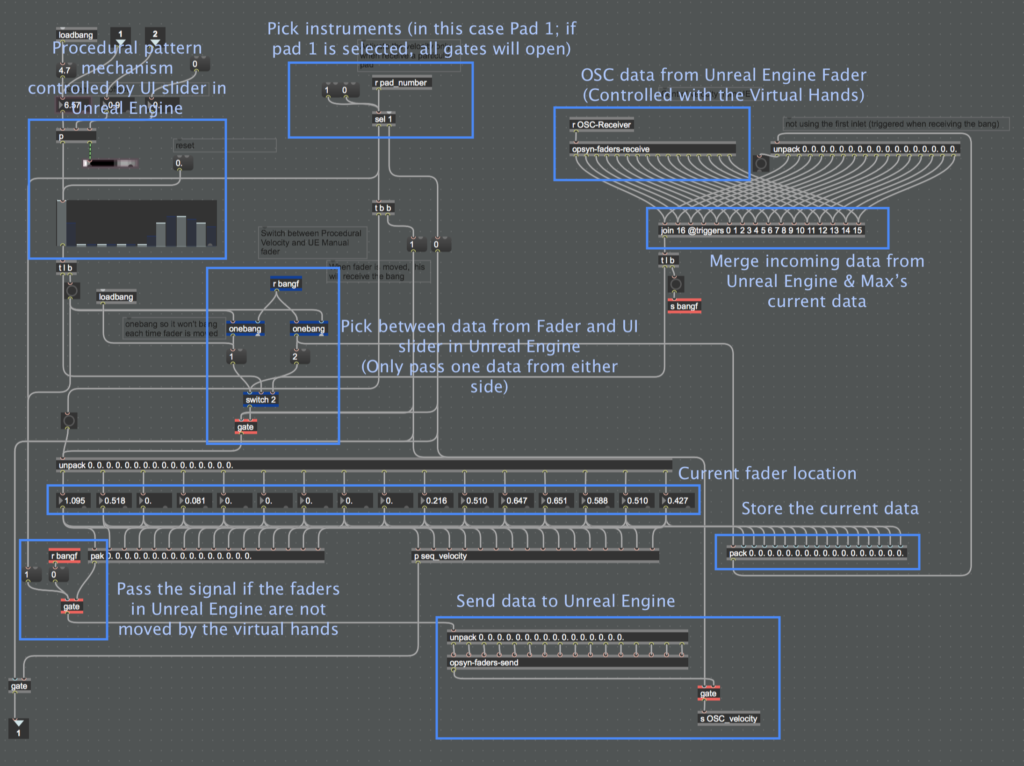

OPSYN sends signals in both directions between Max and Unreal Engine. Max controls the location of objects in the game or changes material colors in Unreal Engine based on musical information, and Unreal Engine controls the musical information based on interaction in the game engine. This was quite challenging and complicated to control, since it causes an endless loop if it is not programmed properly: Max sends a signal to Unreal Engine and changes an object’s location while Unreal Engine is also sending that location information back to Max. For example, OPSYN has faders that control each note’s velocity. They can be moved directly with the virtual hands or with a slider on the UI in Unreal Engine, and moving the slider creates patterns. The patterns are generated on the Max side, so while Max is sending those patterns to Unreal Engine, we want to stop sending location information from Unreal Engine to Max. Likewise, while a fader is being moved with the virtual hands, it should not receive signals from Max that change its location. I solved these issues with the following steps. On the Max side, when signals come in from Unreal Engine, I used a switch or gate to stop sending signals to Unreal Engine (see the figure below).

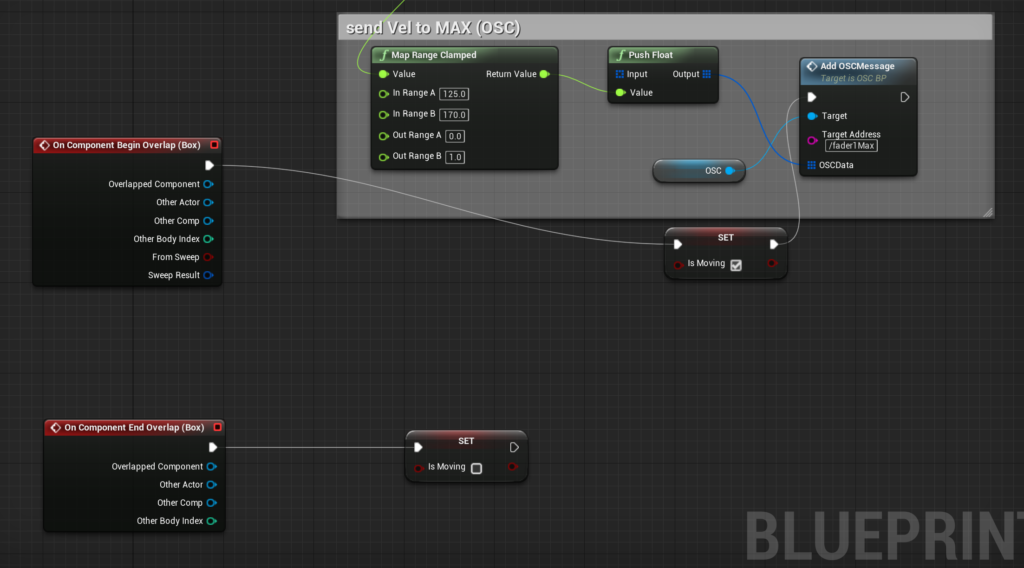
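The gate idea can also be written out in text form. The following is only a minimal Python sketch of the logic, not the actual Max patch: the class name `MaxSideGate`, its methods, and the `send_to_unreal` callback are all hypothetical names made up for illustration.

```python
class MaxSideGate:
    """Hypothetical stand-in for the Max-side switch/gate logic."""

    def __init__(self, send_to_unreal):
        # send_to_unreal: any callable that forwards a value to Unreal Engine
        # (assumed here; in the real project this is an OSC connection).
        self._send_to_unreal = send_to_unreal
        self._gate_open = True
        self.value = 0.0

    def set_value(self, value):
        """Update the fader value and forward it only if the gate is open."""
        self.value = value
        if self._gate_open:
            self._send_to_unreal(value)

    def on_receive_from_unreal(self, value):
        """Close the gate while handling an incoming value, so it is not echoed back."""
        self._gate_open = False
        try:
            self.set_value(value)
        finally:
            self._gate_open = True


sent = []
gate = MaxSideGate(sent.append)
gate.set_value(0.5)               # pattern generated in Max: forwarded
gate.on_receive_from_unreal(0.8)  # came from Unreal Engine: stored, not echoed
```

After these two calls, only the value generated on the Max side has been forwarded, while the value that arrived from Unreal Engine has been stored without being sent back.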

In Unreal Engine, I created a variable that records whether the fader is grabbed by the virtual hand. If the virtual hands touch the fader, “is Moving” is checked. If the hands are not touching the fader, “is Moving” is unchecked. Thus, the fader sends OSC messages to Max only while it is being touched, which works as a kind of safety lock: no data is sent while the fader is not touched.

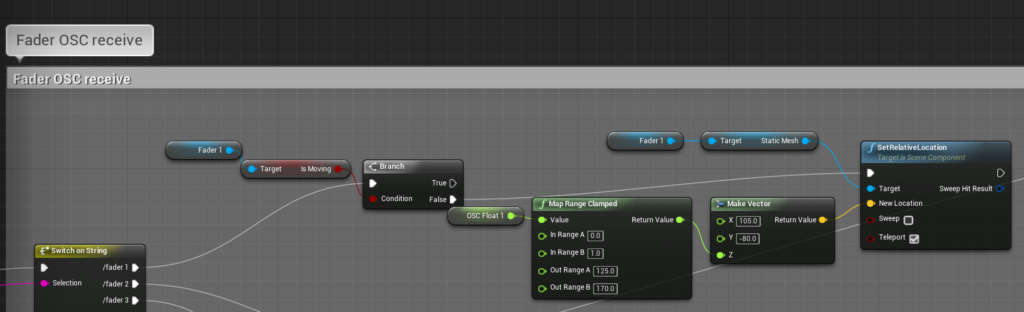

Also, on the OSC receiver side, Unreal Engine will not accept any data from Max via OSC while the faders are being touched by the virtual hand in VR.
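As a rough illustration of this two-way lock, here is a small Python sketch. The real implementation is an Unreal Engine Blueprint, so everything here is an assumption made for the example: the class `VrFader`, its method names, the `send_osc` callback, and the OSC address `/opsyn/fader/1` are hypothetical.

```python
class VrFader:
    """Hypothetical model of a fader guarded by an 'is Moving' flag."""

    def __init__(self, send_osc, address="/opsyn/fader/1"):
        # send_osc(address, value) is an assumed callback standing in for
        # the OSC sender; the address is made up for this sketch.
        self._send_osc = send_osc
        self.address = address
        self.is_moving = False
        self.position = 0.0

    def grab(self):
        """The virtual hand touches the fader."""
        self.is_moving = True

    def release(self):
        """The virtual hand lets go of the fader."""
        self.is_moving = False

    def move_with_hand(self, position):
        """Send the position to Max only while the fader is grabbed."""
        if not self.is_moving:
            return
        self.position = position
        self._send_osc(self.address, position)

    def receive_from_max(self, position):
        """Ignore incoming OSC while grabbed; return True if the value was applied."""
        if self.is_moving:
            return False
        self.position = position
        return True


outgoing = []
fader = VrFader(lambda address, value: outgoing.append((address, value)))
fader.receive_from_max(0.3)   # not grabbed: Max moves the fader
fader.grab()
fader.move_with_hand(0.7)     # grabbed: the hand moves it and Max is notified
fader.receive_from_max(0.1)   # grabbed: the message from Max is ignored
fader.release()
```

The single flag guards both directions, so at any moment only one side is allowed to drive the fader.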

Future Ramifications

Here is a list of things to do.

- Continue to post the developer blogs to my website and explain the details of the creation of OPSYN.

- Add more instruments and make the visuals more interesting (implement instruments like modular synthesizers in the VR environment). I’m planning to add another instrument inspired by Ableton’s Push.

- Create more music and upload the performance videos online.

- Research and implement interaction between OPSYN and real instruments through AR glasses.

- Hand tracking on Oculus Quest (as of now, Oculus Quest hand tracking is not officially supported in Unreal Engine, so I need to wait).

- Organize the project and research an easier way to share OPSYN publicly so anyone can experience it (although the project is already open source and downloadable on GitHub).

Conclusion

Expand Aesthetics and Imagination

Using an environment like a game engine is not for every musician, but if you are interested in trying new things and expanding your aesthetics, I think a project like OPSYN is worth a try. It demands multiple roles, such as design, software development, project management, business development, overall direction and so on. Music in the real world is not separated from other forms of art or from the rest of the world; physics, math, visual perception and body movement are all related to it. A game engine is a place where you can explore and simulate the world, and by learning these things you will expand your musical knowledge and skills as well as realize your imagination.